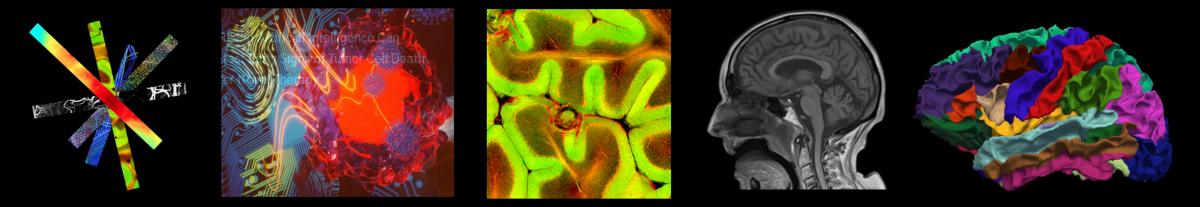

Last year, our deepMRAC study, published in Radiology, evaluated the feasibility of deep learning-based pseudo-CT generation for PET/MR attenuation correction. In that study, our AI team demonstrated that pseudo-CT generated from MR information could significantly improve PET reconstruction in PET/MR, leading to less than 1% uncertainty in brain FDG PET quantification. To explore the clinical value of deep learning-based pseudo-CT generation further, we investigated its feasibility, applicability, and robustness in MR-guided radiation therapy. With the new method, published as the deepMTP framework, we demonstrated the high clinical value of deep learning pseudo-CT in radiation therapy: it reduces radiation dose and provides high-quality treatment planning equivalent to the standard clinical method.

We have shown that deep learning approaches applied to MR-based treatment planning in radiation therapy can produce plans comparable to those from CT-based methods. Further development and clinical evaluation of such approaches have potential value for providing accurate dose coverage, reducing treatment-unrelated dose, and improving the workflow for MR-only treatment planning, while taking advantage of the improved soft-tissue contrast and resolution of MR. Our study demonstrates that deep learning approaches such as deepMTP will substantially impact future work in treatment planning in the brain and elsewhere in the body.

In addressing the challenge of creating a generalizable deep learning segmentation technique for magnetic resonance imaging (MRI), the
