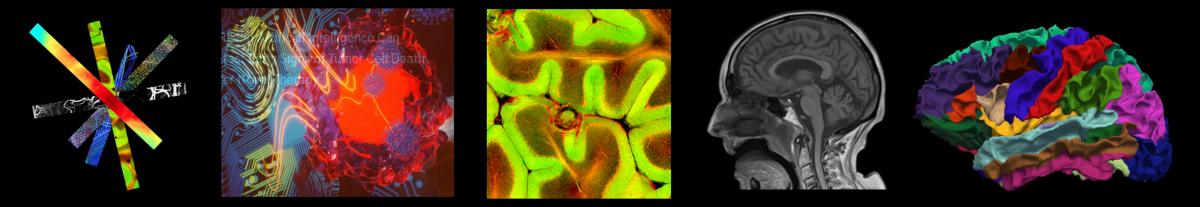

Over the past few years, machine learning has demonstrated the ability to improve image quality when reconstructing undersampled MRI data, creating new opportunities to further improve the performance of rapid MRI. Compared with conventional rapid imaging techniques, machine learning-based methods reformulate image reconstruction as a feature-learning task, inferring the structure of undersampled images from a large image database. This data-driven approach has been shown to efficiently remove artifacts from undersampled images, translate k-space information into images, estimate missing k-space data, and reconstruct MR parameter maps. Many pioneering works from various research groups have greatly influenced the medical image reconstruction community. Now, our artificial intelligence team has developed a new method that further advances machine learning-based image reconstruction by rethinking three essential components of reconstruction: efficiency, accuracy, and robustness. This new framework, named Sampling-Augmented Neural neTwork with Incoherent Structure (SANTIS), was recently accepted for publication in Magnetic Resonance in Medicine.
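To make "learning from undersampled data" concrete, the sketch below shows how a training pair is commonly formed by retrospective undersampling: a fully sampled image is transformed to k-space, unacquired phase-encoding lines are zeroed, and the inverse transform yields an aliased, zero-filled image that serves as the network input. It is a minimal sketch assuming single-coil, 2D Cartesian data with phase encoding along axis 0; the helper names `fft2c`, `ifft2c`, and `make_training_pair` are hypothetical and not taken from SANTIS.

```python
import numpy as np


def fft2c(img):
    """Centered, orthonormal 2D FFT (image -> k-space)."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(img), norm="ortho"))


def ifft2c(ksp):
    """Centered, orthonormal 2D inverse FFT (k-space -> image)."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(ksp), norm="ortho"))


def make_training_pair(image, mask):
    """Return (zero-filled undersampled image, fully sampled reference).

    Hypothetical helper. `mask` is a boolean vector over phase-encoding
    lines (axis 0); True marks a line that is kept.
    """
    kspace = fft2c(image)
    undersampled = kspace * mask[:, None]  # zero out unacquired lines
    zero_filled = ifft2c(undersampled)     # aliased image fed to the network
    return zero_filled, image


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.standard_normal((64, 64))
    mask = np.zeros(64, dtype=bool)
    mask[::4] = True                       # keep every 4th line (R = 4)
    x_in, x_ref = make_training_pair(image, mask)
    print(x_in.shape, x_ref.shape)
```

Because the transforms are orthonormal, keeping every line reproduces the original image exactly, while dropping lines removes energy and introduces the aliasing that a network must learn to remove.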

Representative pioneering methods include:

| Authors | Institution | Method |
| --- | --- | --- |
| Hammernik et al. | New York University/Graz University of Technology | Variational Network |
| Wang et al. | Paul C. Lauterbur Research Center for Imaging | End-to-end CNN |
| Zhu et al. | Harvard University | AUTOMAP |
| Schlemper et al. | Imperial College London | Cascade Network |
| Han et al. | Korea Advanced Institute of Science and Technology | Domain Adaptation Network |
| Akçakaya et al. | University of Minnesota | RAKI |
| Mardani et al. | Stanford University | GANCS |
| Eo et al. | Yonsei University | KIKI-net |
| Biswas et al. | University of Iowa | MoDL-SToRM |
| Quan et al. | Ulsan National Institute of Science and Technology | Cyclic Network |
| Liu et al. | University of Wisconsin | MANTIS |

Our SANTIS framework uses a unique recipe: a data cycle-consistent adversarial network that combines efficient end-to-end convolutional neural network (CNN) mapping, data fidelity enforcement, and adversarial training to reconstruct undersampled MR images.

1) Reconstruction efficiency is ensured by an end-to-end CNN that directly removes image artifacts and noise using highly efficient multi-scale deep feature learning. Many modern CNN designs offer flexibility in network selection and implementation.
2) Reconstruction accuracy is maintained by adversarial training with an additional adversarial loss and a data consistency loss. The reconstructed images have a natural-looking appearance and preserve image texture and detail well, even at high undersampling rates.
3) Reconstruction robustness is enforced by a training strategy that employs sampling augmentation with extensive variation of undersampling patterns. The trained network learns a wide range of aliasing artifact structures and can therefore remove undersampling artifacts more faithfully.
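The accuracy component pairs an adversarial loss with a data consistency loss. The following NumPy sketch shows one plausible way to combine such terms into a single generator objective, assuming an L1 image loss, a non-saturating adversarial term computed from a discriminator probability, and a data consistency term that re-applies the training sample's own sampling mask to the reconstruction's k-space and compares it with the acquired data. The function name, the weights `lam_adv` and `lam_dc`, and the exact form of each term are illustrative assumptions, not the published SANTIS settings; in practice the same arithmetic would be written with a deep learning framework's tensors so that it can be differentiated.

```python
import numpy as np


def fft2c(img):
    """Centered, orthonormal 2D FFT (image -> k-space)."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(img), norm="ortho"))


def generator_loss(recon, reference, d_score, measured_kspace, mask,
                   lam_adv=0.01, lam_dc=1.0):
    """Hypothetical combined loss: pixel + adversarial + data consistency.

    recon, reference : 2D arrays (network output, fully sampled target)
    d_score          : discriminator's probability that `recon` is real
    measured_kspace  : acquired k-space, zero where lines were not sampled
    mask             : boolean phase-encoding mask (axis 0) for this sample
    lam_adv, lam_dc  : illustrative weights, not values from the paper
    """
    # Supervised image-domain term (L1 distance to the reference).
    pixel = np.mean(np.abs(recon - reference))
    # Non-saturating generator term: small when D believes recon is real.
    adversarial = -np.log(d_score + 1e-12)
    # Data consistency: resample the reconstruction with the same mask and
    # penalize any mismatch with the k-space lines that were measured.
    resampled = fft2c(recon) * mask[:, None]
    data_consistency = np.mean(np.abs(resampled - measured_kspace) ** 2)
    total = pixel + lam_adv * adversarial + lam_dc * data_consistency
    return total, {"pixel": pixel, "adv": adversarial, "dc": data_consistency}
```

A reconstruction that equals the reference and fools the discriminator drives all three terms toward zero; the data consistency term additionally anchors the output to the measured k-space lines even where the pixel loss alone would tolerate small deviations.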

SANTIS was evaluated on undersampled knee images acquired with a Cartesian k-space sampling scheme and undersampled liver images acquired with a non-repeating golden-angle radial sampling scheme. On both datasets, SANTIS demonstrated superior reconstruction performance and significantly improved robustness and efficiency compared with several reference methods. We believe SANTIS represents a novel concept for deep learning-based image reconstruction and may further increase the value of MRI by enabling faster image acquisition and reconstruction, one of the goals of our research team.
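Sampling augmentation and the two evaluation trajectories can be illustrated with a small standard-library sketch. It assumes that augmentation means drawing a fresh variable-density Cartesian mask (a fully sampled center plus randomly chosen outer lines) for every training sample, and that golden-angle radial data advance each spoke by 180°·(√5 − 1)/2 ≈ 111.25°, so spoke angles never repeat. The function names, matrix size, acceleration, center size, spoke count, and the random starting spoke index used to vary radial patterns are hypothetical choices for illustration, not details taken from SANTIS.

```python
import math
import random

# Golden angle for radial MRI: 180 deg * (sqrt(5) - 1) / 2, about 111.246 deg.
GOLDEN_ANGLE_DEG = 180.0 * (math.sqrt(5.0) - 1.0) / 2.0


def random_cartesian_mask(n_lines, accel, n_center, rng):
    """Hypothetical variable-density Cartesian mask over phase-encoding lines.

    Keeps `n_center` contiguous lines at the k-space center and fills the
    rest of the budget (n_lines // accel lines in total) at random.
    """
    mask = [False] * n_lines
    start = n_lines // 2 - n_center // 2
    for i in range(start, start + n_center):
        mask[i] = True
    outer = [i for i in range(n_lines) if not mask[i]]
    n_extra = max(0, n_lines // accel - n_center)
    for i in rng.sample(outer, n_extra):
        mask[i] = True
    return mask


def golden_angle_spokes(n_spokes, start_index=0):
    """Spoke angles in degrees (mod 180) for a golden-angle radial scheme."""
    return [((start_index + k) * GOLDEN_ANGLE_DEG) % 180.0
            for k in range(n_spokes)]


def augmented_patterns(n_samples, seed=0):
    """Yield a new Cartesian mask and radial spoke set for every sample."""
    rng = random.Random(seed)
    for _ in range(n_samples):
        # Hypothetical settings: 256 lines, 4x acceleration, 16 center lines.
        mask = random_cartesian_mask(256, accel=4, n_center=16, rng=rng)
        # Hypothetical: a random start index picks a different, never-repeating
        # segment of the golden-angle sequence for each training sample.
        spokes = golden_angle_spokes(21, start_index=rng.randrange(100_000))
        yield mask, spokes
```

Drawing a new pattern per sample means the network rarely sees the same aliasing structure twice, which is the intuition behind training for robustness to varied undersampling.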

In addressing the challenge of creating a generalizable deep learning segmentation technique for magnetic resonance imaging (MRI), the
